Vision-based Smart Parking System

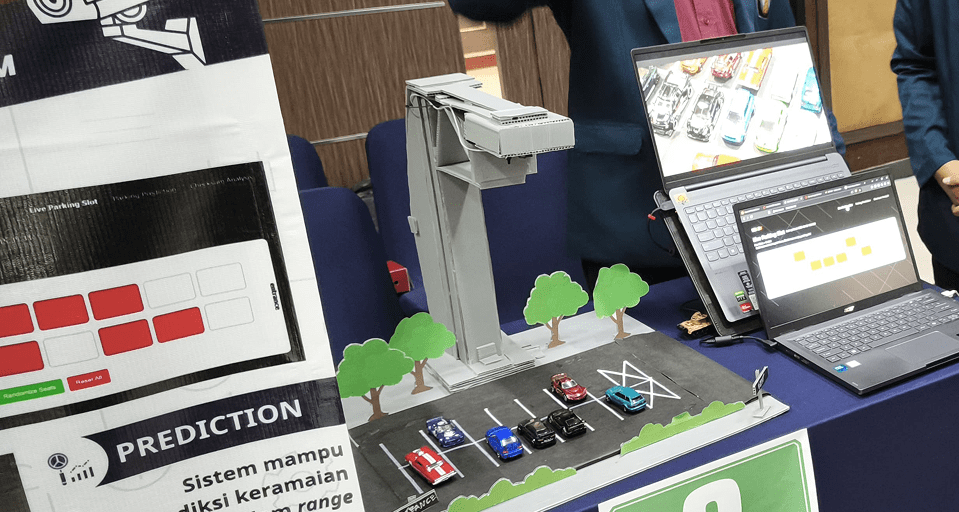

Vision-Based Smart Parking System is a prototype that uses an ESP32-CAM and Edge AI to provide real-time slot availability and congestion prediction through image processing. It offers a low-cost, sensor-free solution for intelligent campus parking management.

Meet Our Team

Problem Statements

- Students struggle to find parking spots because there is no real-time information on available slots.

- Existing parking information relies on personal guesswork whose accuracy cannot be measured.

- Vehicle detection accuracy decreases under varying lighting conditions.

- Data transmission from the camera to the server is prone to errors, threatening data integrity.

- Parking managers find it difficult to optimize space usage without access to historical density data.

Goals

- Provide real-time information on vacant parking slots via an interactive map.

- Achieve vehicle detection accuracy of over 95% with latency under 500ms.

- Ensure 99.999% reliability for data transmission from the ESP32-CAM to the server.

- Predict parking congestion based on historical data to optimize land usage.

- Build a responsive web interface accessible across multiple devices.

Prerequisites – Component Preparation

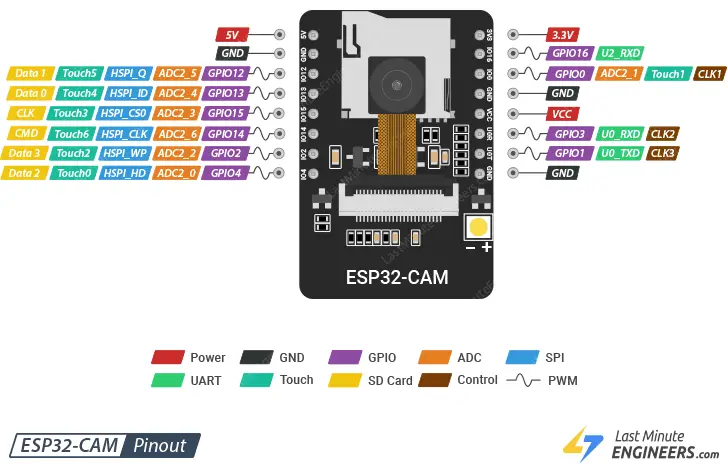

Hardware

- OV5640 Camera: Image acquisition of parking area.

- ESP32-CAM: Image processing and AI inference.

- 2 × 3.7V IMR18650 Batteries + LM7805 Regulator: Portable power supply.

- 3.3µF Capacitor: Voltage stabilization and noise reduction.

Software & Cloud

- Edge Impulse: Model training and export for FOMO MobileNetV2.

- AWS EC2: Hosts backend server and database.

- MySQL (AWS RDS): Stores real-time and historical parking data.

- Laravel: Web interface development framework.

- EON Compiler: Compiles the .tflite model into C++ code for the ESP32.

Supporting Tools

- Arduino IDE: Program development and deployment to ESP32-CAM.

- Figma: UI/UX design of the web interface.

- LabelImg: Parking image dataset annotation.

ESP32-CAM Datasheet (Pinout)

Schematics

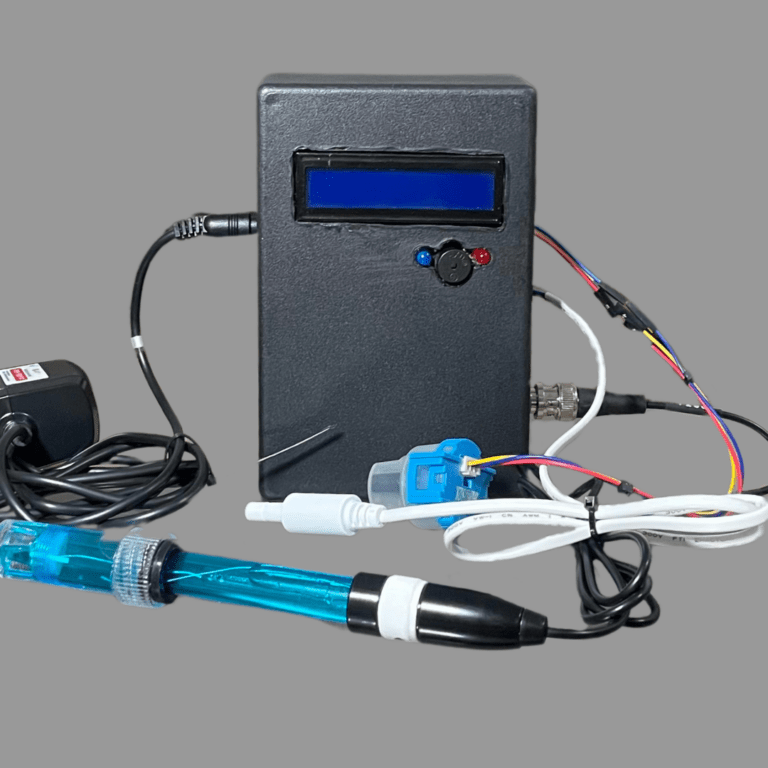

Wiring System

The wiring diagram shows the electrical configuration of the ESP32-CAM parking node. Two IMR18650 batteries in series (7.4V) serve as the main power source. An LM7805 regulator steps this down to 5V, with a 3.3µF stabilization capacitor to reduce noise and prevent oscillation. The regulated 5V then feeds the ESP32-CAM module, which powers both the OV5640 camera and the processor while the automatic parking detection system runs.
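A quick power-budget check shows how much headroom the 7.4V pack gives the LM7805 and how much heat the regulator sheds. The sketch below is a back-of-the-envelope calculation, not a measurement: the 2.0V dropout is a typical LM7805 datasheet figure and the 0.3A load is an assumed ESP32-CAM peak with Wi-Fi and camera active.

```python
# Rough power-budget check for the 2S IMR18650 -> LM7805 -> ESP32-CAM chain.
# ASSUMPTIONS (not project measurements): 2.0V dropout, 0.3A peak load.
V_OUT = 5.0      # LM7805 regulated output (V)
DROPOUT = 2.0    # assumed dropout voltage (V); confirm against your part's datasheet
LOAD_A = 0.3     # assumed ESP32-CAM peak current with Wi-Fi and camera active (A)
CELLS = 2        # two cells in series -> 7.4V nominal


def regulator_dissipation(v_in: float, load_a: float = LOAD_A) -> float:
    """Heat burned in a linear regulator: (Vin - Vout) * I, in watts."""
    return max(v_in - V_OUT, 0.0) * load_a


def in_regulation(v_in: float) -> bool:
    """True while the input stays at or above Vout + dropout."""
    return v_in >= V_OUT + DROPOUT


# Per-cell voltages from fully charged (4.2V) down to 3.3V.
for per_cell in (4.2, 3.7, 3.5, 3.3):
    v_pack = per_cell * CELLS
    print(f"{v_pack:.1f}V pack -> {regulator_dissipation(v_pack):.2f} W, "
          f"regulating: {in_regulation(v_pack)}")
```

Under these assumptions the regulator drops out once each cell falls below about 3.5V, and at the nominal 7.4V roughly a third of the battery power becomes heat (a linear regulator's efficiency is about 5/7.4, or 68%).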

AWS Cloud Architecture

This AWS cloud architecture uses a VPC (Virtual Private Cloud) split into public and private subnets. The public subnet hosts the web application on an EC2 instance reachable through the Elastic IP 54.86.233.199. The private subnet (CIDR 10.0.0.0/16) isolates the critical data components: AWS RDS Aurora (MySQL) for database storage, AWS Lambda for automated parking prediction, and EventBridge for scheduled triggers. The ESP32-CAM devices send real-time parking data via HTTP POST requests to the public endpoint http://54.86.233.199/live-parking-slot for centralized processing and analysis.
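The addressing scheme can be sanity-checked with Python's standard `ipaddress` module. This sketch assumes 10.0.0.0/16 is the VPC-wide range and carves two hypothetical /24 subnets out of it; the project's actual subnet boundaries may differ.

```python
import ipaddress

# Range quoted in the architecture; treated here as the VPC-wide block (assumption).
VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")
ELASTIC_IP = ipaddress.ip_address("54.86.233.199")

# Hypothetical layout: first /24 for public resources, second /24 for private ones.
_subnets = VPC_CIDR.subnets(new_prefix=24)
public_subnet = next(_subnets)    # 10.0.0.0/24 (EC2 web server)
private_subnet = next(_subnets)   # 10.0.1.0/24 (RDS, Lambda network interfaces)


def is_internet_routable(addr: str) -> bool:
    """True for globally routable addresses such as an Elastic IP."""
    return ipaddress.ip_address(addr).is_global


print(public_subnet, private_subnet)
print("Elastic IP routable:", ELASTIC_IP.is_global)
print("Private-subnet address routable:", is_internet_routable("10.0.1.25"))
```

Every address in 10.0.0.0/8 is private address space, so the database and Lambda are not directly reachable from the Internet, while the ESP32-CAM only ever needs the public Elastic IP.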

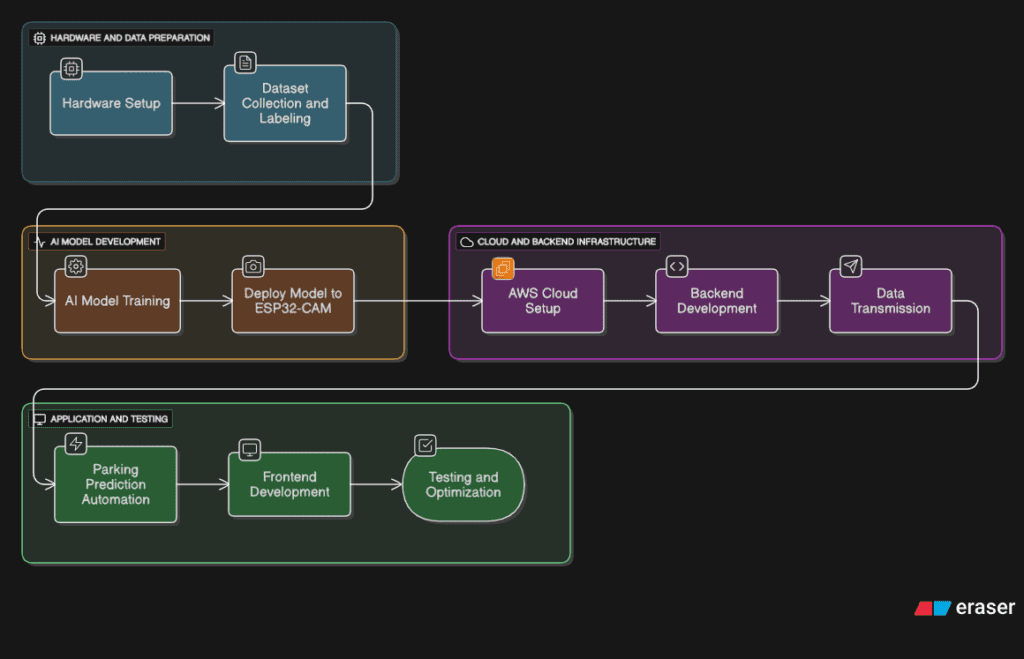

How to do it?

Hardware Setup

We kicked off the project by building a simple and stable hardware system using the ESP32-CAM module paired with a 5MP OV5640 camera. For power, we used dual IMR18650 batteries (7.4V), stabilized with an LM7805 voltage regulator and a 3.3µF capacitor to reduce noise. The output provides a clean 5V to the ESP32-CAM’s VCC and GND pins. This minimal setup ensures the ESP32 can consistently capture images without power fluctuations.

Dataset Collection & Labeling

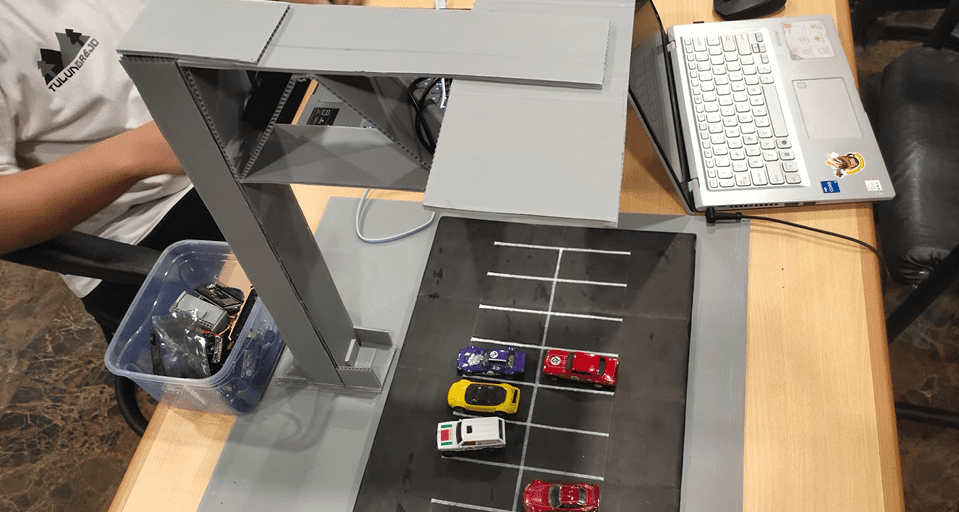
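Because every frame covers the same 16-slot lot, the dataset size can be cross-checked: 181 images × 16 slots = 2,896 boxes, matching the reported object count. The sketch reads one LabelImg Pascal VOC file with the standard library. The class names `empty`/`occupied`, the file name, and the box coordinates are hypothetical; the mapping should follow whatever `<name>` strings the real annotations use.

```python
import xml.etree.ElementTree as ET
from collections import Counter

IMAGES, SLOTS_PER_IMAGE = 181, 16
EXPECTED_BOXES = IMAGES * SLOTS_PER_IMAGE   # 2,896, the reported object count

# Hypothetical label names mapped to the class ids used in the write-up.
CLASS_IDS = {"empty": 0, "occupied": 1}

# Hypothetical LabelImg output, trimmed to two of the 16 slots.
SAMPLE_VOC = """<annotation>
  <filename>frame_001.jpg</filename>
  <size><width>160</width><height>120</height><depth>3</depth></size>
  <object><name>empty</name>
    <bndbox><xmin>4</xmin><ymin>6</ymin><xmax>38</xmax><ymax>30</ymax></bndbox>
  </object>
  <object><name>occupied</name>
    <bndbox><xmin>42</xmin><ymin>6</ymin><xmax>78</xmax><ymax>30</ymax></bndbox>
  </object>
</annotation>"""


def read_voc(xml_text: str) -> list[tuple[int, tuple[int, ...]]]:
    """Return (class_id, (xmin, ymin, xmax, ymax)) for every labeled object."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        class_id = CLASS_IDS[obj.findtext("name")]
        bndbox = obj.find("bndbox")
        coords = tuple(int(bndbox.findtext(k)) for k in ("xmin", "ymin", "xmax", "ymax"))
        boxes.append((class_id, coords))
    return boxes


boxes = read_voc(SAMPLE_VOC)
print(EXPECTED_BOXES, Counter(class_id for class_id, _ in boxes))
```

Running the same reader over every annotation file and checking that each returns 16 boxes would catch missing or duplicated labels before training.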

Images were captured using the OV5640 camera at 160×120 resolution under various lighting conditions (morning, afternoon, evening). A total of 181 images were collected. Each image was annotated in LabelImg using the Pascal VOC format. Parking slots were labeled with bounding boxes as either empty (class 0) or occupied (class 1), resulting in 2,896 labeled objects. This diverse dataset was key to building a robust model.

AI Model Training
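The scores reported for this step rest on the standard detection metrics. The sketch computes precision, recall, and F1 from true-positive, false-positive, and false-negative counts, and inverts the F1 formula, R = F1·P / (2P - F1), to show what recall a given precision and F1 imply. The counts in the example are hypothetical, not the project's confusion matrix.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Standard detection metrics from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def implied_recall(precision: float, f1: float) -> float:
    """Solve F1 = 2PR / (P + R) for R, given P and F1."""
    return f1 * precision / (2 * precision - f1)


# Hypothetical counts: no false positives, 5 missed vehicles out of 100.
p, r, f1 = precision_recall_f1(tp=95, fp=0, fn=5)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.3f}")

# With precision 1.00 and F1 0.98 (the figures in the conclusion), recall is about 0.96.
print(f"implied recall: {implied_recall(1.0, 0.98):.3f}")
```

With zero false positives, precision stays at 1.00 and F1 is limited only by recall, that is, by missed vehicles.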

The model was trained on this dataset in Edge Impulse using FOMO MobileNetV2 0.35, a lightweight architecture well suited to edge deployment. Images were resized to 160×160 and normalized. Training ran for 60 epochs with a learning rate of 0.001 and a batch size of 32. The data was split 81% for training and 19% for testing. The result: 97.14% accuracy and a 98.7% F1-score on the test data, more than good enough for real-time parking detection.

Model Deployment to ESP32-CAM
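Once the model runs on the ESP32-CAM, its detections still have to become the per-slot status string that is sent to the server. The sketch is written in Python for readability rather than the Arduino C++ that actually runs on the device. It assumes, hypothetically, that the 16 slots form a regular 4×4 grid over the 160×160 model input and that detections arrive as labeled centroids; a real lot would usually need a hand-drawn region per slot.

```python
from typing import Iterable, NamedTuple

INPUT_SIZE = 160           # model input width/height in pixels
GRID = 4                   # assumed 4x4 slot layout
CELL = INPUT_SIZE // GRID  # 40 px per slot cell


class Detection(NamedTuple):
    label: str  # assumed class names: "empty" or "occupied"
    x: int      # centroid x in model-input pixels
    y: int      # centroid y in model-input pixels


def slots_bitstring(detections: Iterable[Detection]) -> str:
    """Map centroids to slot cells; '1' = occupied, '0' = empty or no detection."""
    status = ["0"] * (GRID * GRID)
    for det in detections:
        col = min(max(det.x, 0) // CELL, GRID - 1)   # clamp to the grid
        row = min(max(det.y, 0) // CELL, GRID - 1)
        if det.label == "occupied":
            status[row * GRID + col] = "1"
    return "".join(status)


frame = [Detection("occupied", 10, 12), Detection("empty", 55, 12),
         Detection("occupied", 150, 150)]
print(slots_bitstring(frame))
```

Treating "no detection" as empty is a design choice; a stricter version could report such slots as unknown instead.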

After training, the model was exported in TensorFlow Lite (.tflite) format and then compiled into a C++ header (.h) with Edge Impulse’s EON Compiler. We flashed it to the ESP32-CAM from the Arduino IDE, using the `esp32_camera` example as base code. The model footprint is just 626.5KB, and it infers a frame in about 2.3 seconds directly on the ESP32, with no cloud needed!

Cloud Infrastructure Setup
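Two parts of this setup are easy to get wrong: the EventBridge schedule and the rule that lets only the EC2 instance reach MySQL. The sketch builds the request parameters as plain dictionaries in the shape accepted by boto3's `events.put_rule` and `ec2.authorize_security_group_ingress`. The rule name and security-group IDs are hypothetical placeholders, and the boto3 calls are left as comments so the sketch runs without AWS credentials.

```python
MYSQL_PORT = 3306


def schedule_rule(name: str, every_days: int) -> dict:
    """Parameters for an EventBridge rate schedule (rate(1 day) vs rate(N days))."""
    unit = "day" if every_days == 1 else "days"
    return {
        "Name": name,
        "ScheduleExpression": f"rate({every_days} {unit})",
        "State": "ENABLED",
    }


def mysql_from_app_only(db_sg_id: str, app_sg_id: str) -> dict:
    """Ingress rule: allow TCP 3306 on the DB security group only from the app's group."""
    return {
        "GroupId": db_sg_id,
        "IpPermissions": [{
            "IpProtocol": "tcp",
            "FromPort": MYSQL_PORT,
            "ToPort": MYSQL_PORT,
            "UserIdGroupPairs": [{"GroupId": app_sg_id}],
        }],
    }


rule = schedule_rule("parking-forecast", every_days=30)          # hypothetical name
ingress = mysql_from_app_only("sg-0db0000000000000",            # placeholder IDs
                              "sg-0app000000000000")
# With credentials configured, these would be passed as:
#   boto3.client("events").put_rule(**rule)
#   boto3.client("ec2").authorize_security_group_ingress(**ingress)
print(rule["ScheduleExpression"])
```

Referencing the application's security group instead of an IP range keeps the database closed to everything else. A complete schedule also needs `put_targets` to point the rule at the Lambda function, plus a Lambda `add_permission` grant so EventBridge may invoke it.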

We built a secure and scalable cloud backend on AWS. The Laravel backend runs on EC2 (t2.micro, Ubuntu 22.04) in a public subnet with an Elastic IP. MySQL 8.0 runs on Amazon RDS in a private subnet, protected by security groups. Prediction tasks are automated with AWS Lambda (Python), triggered by EventBridge every 30 days. The prediction pipeline is fully serverless, which keeps it cost-efficient.

Backend Development

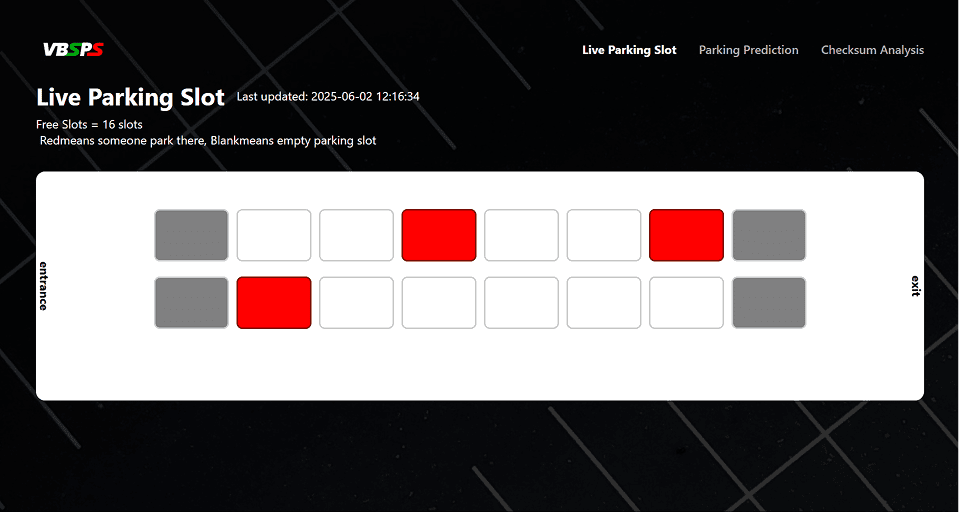
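The backend itself is Laravel, but the integrity check it performs is language-neutral, so it is sketched here in Python. The field names (`timestamp`, `slots`, `crc32`) and the choice to checksum the string `timestamp|slots` are assumptions; the real payload layout is defined by the project's firmware. The write-up mentions both CRC32 and CRC-32C, which use different polynomials, so `zlib.crc32` (standard CRC-32) only matches if the ESP32 uses that same variant.

```python
import json
import zlib
from datetime import datetime

SLOT_COUNT = 16


def checksum(timestamp: str, slots: str) -> int:
    """Standard CRC-32 (zlib) over the hypothetical 'timestamp|slots' string.

    If the firmware uses CRC-32C (Castagnoli) instead, this must be replaced.
    """
    return zlib.crc32(f"{timestamp}|{slots}".encode("utf-8"))


def validate_payload(raw_body: bytes) -> dict:
    """Parse one POST body and raise ValueError if it should be rejected."""
    data = json.loads(raw_body)                  # JSONDecodeError is a ValueError
    try:
        # Hypothetical field names; match them to the real firmware payload.
        ts, slots, crc = data["timestamp"], data["slots"], data["crc32"]
    except KeyError as exc:
        raise ValueError(f"missing field {exc}") from exc
    datetime.fromisoformat(ts)                   # ValueError on a malformed timestamp
    if len(slots) != SLOT_COUNT or set(slots) - {"0", "1"}:
        raise ValueError("slots must be 16 characters of '0'/'1'")
    if checksum(ts, slots) != crc:
        raise ValueError("checksum mismatch: payload changed in transit")
    return {"timestamp": ts, "slots": slots}


ts, slots = "2024-05-01T07:30:00", "0010110010000000"
good = json.dumps({"timestamp": ts, "slots": slots, "crc32": checksum(ts, slots)}).encode()
print(validate_payload(good))
```

A rejected request would typically get an HTTP 4xx response so the device can resend; accepted data would then be written to `live_parking_prediction`.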

Our backend uses Laravel 10 (PHP 8.2) and exposes a dedicated endpoint, `/live-parking-slot`, that receives ESP32-CAM data via POST. Each request carries a timestamp, the parking slot status, and a CRC32 checksum for integrity checking. The data is stored in the `live_parking_prediction` table. The API follows RESTful principles for seamless integration with any dashboard or frontend client.

Data Transmission
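Each report is a small JSON document sent every 10 seconds. As a rough illustration of why this is light on bandwidth, the sketch builds one payload and estimates daily traffic. It is Python standing in for the Arduino HTTPClient code; the field names (`timestamp`, `slots`, `crc32`), the checksummed `timestamp|slots` string, and the sample slot string are assumptions, and HTTP headers are ignored in the size estimate.

```python
import json
import zlib

INTERVAL_S = 10          # one report every 10 seconds
SECONDS_PER_DAY = 86_400


def build_payload(timestamp: str, slots: str) -> bytes:
    """Serialize one report; CRC-32 covers the hypothetical 'timestamp|slots' string."""
    crc = zlib.crc32(f"{timestamp}|{slots}".encode("utf-8"))
    body = {"timestamp": timestamp, "slots": slots, "crc32": crc}   # assumed field names
    return json.dumps(body, separators=(",", ":")).encode("utf-8")  # compact JSON


payload = build_payload("2024-05-01T07:30:00", "0010110010000000")
reports_per_day = SECONDS_PER_DAY // INTERVAL_S
body_bytes_per_day = reports_per_day * len(payload)
print(len(payload), "bytes per report")
print(reports_per_day, "reports/day,", round(body_bytes_per_day / 1024), "KiB/day of JSON")
```

At 8,640 reports a day and well under 100 bytes each, the JSON bodies stay below a megabyte per day; HTTP header overhead can be larger than the bodies themselves.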

Every 10 seconds, the ESP32-CAM sends a JSON payload to the server containing the real-time status of all 16 parking slots as a binary string (e.g. 001011001...), a timestamp, and a checksum. The data is pushed to http://54.86.233.199/live-parking-slot using the built-in HTTPClient library. It is fast, lightweight, and reliable, even on limited bandwidth.

Automated Parking Forecasting

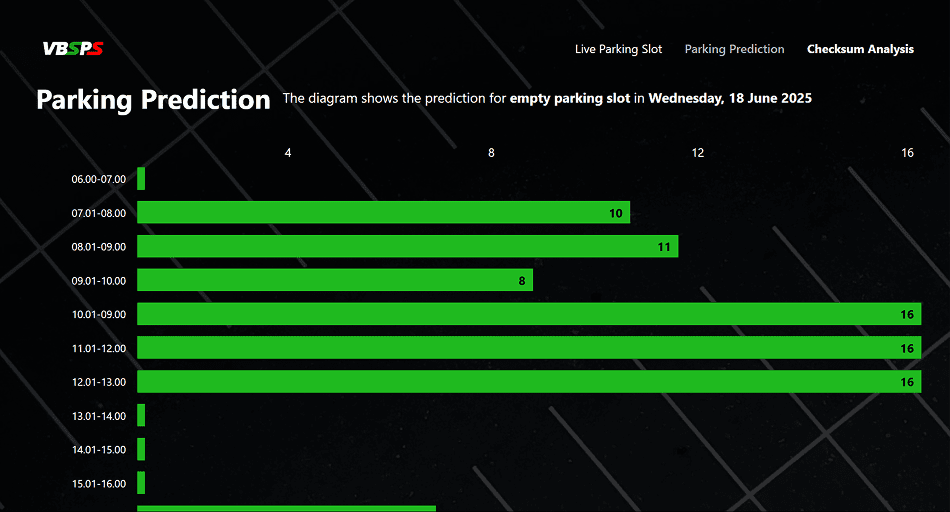
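Prophet expects a table with a `ds` (timestamp) column and a `y` (value) column. The sketch shows the steps around the model under stated assumptions: raw 10-second readings are averaged into hourly occupied-slot counts, and the hourly forecast is trimmed to 06:00 through 21:00 and serialized as a CSV string. The CSV column names are hypothetical, and the Prophet calls appear only as comments so the sketch runs with the standard library alone.

```python
from collections import defaultdict
from datetime import datetime


def hourly_occupancy(readings):
    """Average occupied-slot counts per hour: [(hour_start, mean_occupied)], i.e. ds/y."""
    buckets = defaultdict(list)
    for ts, slots in readings:                  # ts: datetime, slots: "0101..." string
        hour = ts.replace(minute=0, second=0, microsecond=0)
        buckets[hour].append(slots.count("1"))
    return [(hour, sum(counts) / len(counts)) for hour, counts in sorted(buckets.items())]


# Model step inside the Lambda (comments only; needs pandas and prophet):
#   df = pandas.DataFrame(hourly_occupancy(readings), columns=["ds", "y"])
#   m = Prophet()
#   m.fit(df)
#   future = m.make_future_dataframe(periods=24, freq="H", include_history=False)
#   forecast = m.predict(future)
#   rows = zip(forecast["ds"], forecast["yhat"])


def forecast_to_csv(rows, first_hour=6, last_hour=21, slots=16):
    """Keep 06:00-21:00 (inclusive, an assumption) and serialize as a CSV string.

    Column names are hypothetical; forecasts are clamped to the 0..slots range.
    """
    lines = ["hour,predicted_occupied"]
    for ts, yhat in rows:
        if first_hour <= ts.hour <= last_hour:
            lines.append(f"{ts:%H}:00,{min(max(yhat, 0.0), slots):.1f}")
    return "\n".join(lines)


readings = [
    (datetime(2024, 5, 1, 7, 0, 10), "1100000000000000"),
    (datetime(2024, 5, 1, 7, 30, 0), "1111000000000000"),
    (datetime(2024, 5, 1, 8, 5, 0), "0000000000000000"),
]
print(hourly_occupancy(readings))
print(forecast_to_csv([(datetime(2024, 5, 2, 5), 1.0), (datetime(2024, 5, 2, 6), 2.5),
                       (datetime(2024, 5, 2, 21), -0.4), (datetime(2024, 5, 2, 22), 3.0)]))
```

Clamping matters because an additive model like Prophet can forecast slightly negative values overnight, which would look odd on a chart of parked cars.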

Using AWS Lambda, we fetch 30 days of historical parking data and train a Prophet time-series model. The forecast contains hourly predictions from 6 AM to 9 PM and is saved as a CSV string in the `parking_prediction` table. These predictions help users plan ahead and avoid full parking lots, all running on serverless infrastructure.

Frontend Interface
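The dashboard needs two data shapes: a 4×4 grid of slot colors (white for empty, red for occupied) and a Chart.js dataset for the hourly forecast. The server-side shaping might look like this sketch, written in Python for illustration; in the project this logic would live in Laravel. The CSV column names are hypothetical, and the chart object follows the `labels`/`datasets` structure of a Chart.js `data` config.

```python
import csv
import io
import json

COLORS = {"0": "white", "1": "red"}   # empty / occupied, as on the dashboard


def slot_grid(bits: str, width: int = 4) -> list[list[str]]:
    """Turn a 16-character status string into rows of cell colors."""
    if len(bits) != width * width or set(bits) - set(COLORS):
        raise ValueError("expected a 16-character string of '0'/'1'")
    return [[COLORS[b] for b in bits[r * width:(r + 1) * width]] for r in range(width)]


def chart_data(prediction_csv: str) -> dict:
    """Convert a prediction CSV (hypothetical columns) into Chart.js 'data'."""
    rows = list(csv.DictReader(io.StringIO(prediction_csv)))
    return {
        "labels": [row["hour"] for row in rows],
        "datasets": [{
            "label": "Predicted occupied slots",
            "data": [float(row["predicted_occupied"]) for row in rows],
        }],
    }


print(slot_grid("1000010000100001")[1])
print(json.dumps(chart_data("hour,predicted_occupied\n06:00,2.5\n07:00,9.0")))
```

Validating the status string before rendering means a malformed row in the database surfaces as an error rather than as a silently wrong grid.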

The web interface is built with Laravel Blade and Tailwind CSS for a clean, responsive mobile UI. The real-time parking status is displayed as a 4×4 slot grid (white for empty, red for occupied), updated every few seconds via AJAX. Users can also view hourly prediction charts, built with Chart.js, to see the expected parking load throughout the day.

Testing & Optimization

We validated the system with over 50 test scenarios using toy cars under different lighting conditions. The target was at least 95% accuracy, and the system exceeded it. End-to-end response time (from image capture to dashboard update), measured with Wireshark, was under 8 seconds. A 72-hour stress test using Locust (1,000 req/min) achieved 99.98% uptime, and memory optimization reduced RAM usage on the ESP32 by 12%.
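The stress-test figures translate into concrete numbers with a little arithmetic: the total request volume, and how much cumulative downtime 99.98% uptime allows over 72 hours. For comparison only, the sketch also applies the 99.999% reliability goal as if it were an uptime figure over the same window; that is a simplification, since transmission reliability and uptime are different metrics.

```python
HOURS = 72
REQUESTS_PER_MIN = 1_000
WINDOW_S = HOURS * 3600


def downtime_budget_s(availability: float, window_s: int = WINDOW_S) -> float:
    """Seconds of allowed downtime for a given availability over the window."""
    return (1.0 - availability) * window_s


total_requests = HOURS * 60 * REQUESTS_PER_MIN
print(f"{total_requests:,} requests at {REQUESTS_PER_MIN / 60:.1f} req/s")
print(f"99.98% uptime  -> {downtime_budget_s(0.9998):.1f} s down in {HOURS} h")
# Simplification: treats the 99.999% reliability goal as an uptime target.
print(f"99.999% target -> {downtime_budget_s(0.99999):.1f} s down in {HOURS} h")
```

In other words, the measured 99.98% corresponds to under a minute of cumulative downtime across the three days.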

Conclusion and Recommendations

- Conclusion:

The Vision-Based Smart Parking System successfully addressed all research objectives. Using a camera and a FOMO MobileNetV2 AI model, it achieved 97.14% detection accuracy for real-time parking slot availability. The model demonstrated excellent performance, with a precision of 1.00 and an F1-score of 0.98. Data transmission reliability reached 94.924% using CRC-32C error checking on communication from the ESP32-CAM to the AWS cloud, ensuring consistent data integrity. The functional prototype delivers an efficient embedded AI system, a web-based visualization interface, and parking density prediction, meeting the goals of accuracy, reliability, and operational efficiency.

- Recommendations:

Future research should increase on-device processing headroom, either through lighter, pruned AI models or hardware upgrades (e.g., Raspberry Pi 4, higher-resolution cameras), to improve detection detail. Optimizing connectivity with offline buffering or alternative networks (e.g., LoRa, 4G/LTE, dual WiFi) would reduce WiFi dependency. Additionally, developing features for identifying specific available slots and letting users select them in the interface would significantly improve the system's utility.
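The offline-buffering recommendation can be prototyped with a bounded queue: reports accumulate while Wi-Fi is down and are flushed in order once a send succeeds. This sketch uses Python's `collections.deque` with `maxlen`, which discards the oldest entries when full. The capacity of 360 reports (one hour at one report every 10 seconds) and the `send` callback are hypothetical; on the ESP32 itself this would be a fixed-size ring buffer in C++.

```python
from collections import deque
from typing import Callable

CAPACITY = 360  # hypothetical: one hour of reports at one every 10 s


class OfflineBuffer:
    """Queue reports while offline; flush oldest-first when the link returns."""

    def __init__(self, send: Callable[[bytes], bool], capacity: int = CAPACITY):
        self._send = send                          # hypothetical: True on delivery
        self._queue = deque(maxlen=capacity)       # drops the oldest entry when full

    def submit(self, payload: bytes) -> None:
        self._queue.append(payload)
        self.flush()

    def flush(self) -> int:
        """Send queued payloads in order; stop at the first failure. Returns count sent."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break                              # still offline: keep the rest queued
            self._queue.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._queue)


online = False
delivered = []


def fake_send(payload: bytes) -> bool:
    """Stand-in transport: succeeds only while `online` is True."""
    if online:
        delivered.append(payload)
    return online


buf = OfflineBuffer(fake_send, capacity=3)
for i in range(5):
    buf.submit(f"report-{i}".encode())   # offline: only the newest 3 survive
online = True
buf.flush()
print(delivered)
```

Dropping the oldest reports keeps the freshest occupancy for the live map; the trade-off is a gap in the history used for forecasting.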

See More on YouTube

Contact us

GitHub Repository: https://github.com/maux-unix/vbsps

LinkedIn Profiles:

- Faiz Ihsan Akram: https://www.linkedin.com/in/faiz-ihsan-akram-b5b83028a/

- Kurnita Ruci Widyana: https://www.linkedin.com/in/kurnita-ruci-widyana/

- Maulana Muhammad Ali: https://www.linkedin.com/in/maulana-ali-dev/

- Naufal Arsya Dinata: https://www.linkedin.com/in/naufal-arsya-dinata/

- Zaenal Arifin Radityo: https://www.linkedin.com/in/zaenalr/