Introducing bangunIN👀

A YOLOv8-Based Drowsiness Detection System Integrated with Cloud Computing and a Fault-Tolerant System

📝Project Domain:

Project Short Description

This project focuses on the development of a driver drowsiness detection system using YOLOv8 object detection, embedded within a Raspberry Pi 4, and integrated with Cloud Computing and a Fault Tolerant System. It is designed to enhance driving safety, particularly in public transportation settings such as buses.

Using a webcam, the system continuously captures images of the driver's face in real time to detect drowsiness indicators such as closed eyes, head tilts, or fatigued facial expressions. The YOLOv8 model, custom-trained for this task, runs locally on the Raspberry Pi (no internet connection required) and triggers a buzzer and a red LED alert when signs of drowsiness are detected.

Additionally, detection data is sent to a cloud-based dashboard (Blynk) where fleet managers can monitor real-time conditions and review historical logs. The system also features a Fault Tolerant mechanism that automatically switches to a backup camera if the main one fails, and maintains error logs locally to ensure uninterrupted functionality.

By integrating Artificial Intelligence, IoT, and Cloud services, this solution offers a real-time, reliable, and scalable approach to improving road safety in commercial transportation.

🧑🤝🧑Meet Our Team

- Project Leader: Rashid F.

- Software Engineer: Zakaria R.

- UI/UX: Syakhish N.

- Hardware Engineer: Michael Y.

- AI Engineer: M. Rasyid

❗ Problem Statements

- 🚗 Traffic accidents caused by drowsy drivers remain a serious issue, particularly during peak travel periods such as mudik (the annual homecoming exodus around Eid al-Fitr).

- 😴 The lack of drowsiness detection systems increases the risk of accidents caused by driver fatigue.

- ⚠️ PO Setianegara’s reputation and passenger trust could be at risk due to inadequate safety measures.

🎯 Goals

- 📡 Develop an automated real-time system to detect signs of drowsiness in bus drivers.

- 🤖 Integrate Embedded AI, Cloud Computing, and Fault Tolerant Systems to enhance detection accuracy and reliability.

- 🚨 Alert drivers with a buzzer when drowsiness is detected to prevent accidents.

- 💾 Store and analyze detection data in the cloud for ongoing monitoring and management.

- 🔄 Ensure continuous system operation even during component failures by utilizing local storage during disruptions.

🗣️Solution Statement:

- 🧠 Use the YOLOv8 model to detect driver facial expressions in real time.

YOLOv8 is used for its high speed and accuracy in detecting visual cues such as closed eyes, gaze direction, head tilting, and yawning. The model is trained on facial datasets to recognize signs of drowsiness with high precision.

- 📷 Use a USB camera as the visual input to capture the driver's face.

The camera is mounted facing the driver and continuously captures facial images, which are then sent to the Raspberry Pi for analysis.

- 🖥️ Use a Raspberry Pi 4 as the main computing device for Embedded AI.

The Raspberry Pi 4 processes the images locally with YOLOv8 instead of depending on cloud connectivity, which allows the system to operate offline through edge processing.

- 📡 Use Cloud Computing to store detection results and support monitoring and reporting for management.

Each detection result, timestamp, and driver status is sent to a cloud platform (such as Blynk Cloud), enabling centralized remote monitoring by management.

- 🚨 Use a buzzer to alert the driver directly when drowsiness is detected.

When the system detects signs of drowsiness, the buzzer emits a loud warning sound that prompts the driver to regain alertness, helping to prevent accidents.

- 🔁 Implement a Fault Tolerant System with backup models and dual cameras.

If the main YOLOv8 model or the primary camera fails, the system automatically switches to a backup model or the redundant camera to keep operating.

- 💾 Use a local buffer on the Raspberry Pi to store data temporarily while the cloud is unreachable.

If the internet connection is lost, detection data is saved to a temporary local directory and synchronized with the cloud once connectivity is restored.

- 📊 Display real-time data through a web dashboard or cloud-based application (e.g., Blynk).

The dashboard shows the driver's status (normal or drowsy), detection logs, and virtual LED alerts, allowing management to monitor the system at any time.

- 🔒 Use data encryption and authentication to secure cloud transmission.

All images and logs are transmitted over secure protocols and are accessible only to authorized parties, supporting compliance with personal data protection regulations.

- ⚙️ Use the Agile development methodology and Object-Oriented Programming (OOP) for modular, efficient system development.

Agile enables iterative development with periodic evaluations, while OOP simplifies the management of system modules such as the camera, detection, cloud communication, and buzzer control.

With all these solutions in place, the system can detect drowsiness quickly and accurately, remain resilient against failures, and help fleet management monitor driver conditions, enhancing public transport safety.

🛠️Prerequisites – Component Preparation:

Before developing and implementing the drowsiness detection system, several hardware and software components must be properly prepared and configured to ensure optimal system performance. The following is a list of essential requirements and setup steps for each component:

🖥️ 1. Raspberry Pi 4 Model B (4GB RAM)

Function: Main computation unit for the embedded AI system.

Preparation:

- Install the operating system (Raspberry Pi OS 64-bit).

- Configure WiFi and SSH access.

- Install Python 3, OpenCV, PyTorch, the Ultralytics package (which provides YOLOv8), and other supporting libraries.

- Set up the USB camera as the visual input source.
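To confirm that the libraries above are importable before running the detector, a quick check script can help. This is a minimal sketch: the module names `cv2`, `torch`, and `ultralytics` are assumed to match the project's dependencies, and the script only reports what is missing rather than installing anything.

```python
import importlib.util
import sys

# Assumed top-level module names: OpenCV (cv2), PyTorch (torch), YOLOv8 (ultralytics).
REQUIRED_MODULES = ["cv2", "torch", "ultralytics"]


def missing_modules(names):
    """Return the subset of top-level module names that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    print(f"Python {sys.version.split()[0]}")
    print("Missing modules:", ", ".join(missing) if missing else "none")
```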

📷 2. USB Camera / Webcam (Primary and Redundant)

Function: Captures real-time images of the driver’s face.

Preparation:

- Test USB connectivity and compatibility with the Raspberry Pi.

- Set an appropriate resolution (e.g., 640×480).

- Prepare a backup camera to ensure fault tolerance in case the primary camera fails.

🧠 3. YOLOv8 Model (Custom Trained)

Function: Detects drowsiness based on visual features (closed eyes, head tilt, yawning).

Preparation:

- Train the model using a dataset of facial expressions and drowsiness indicators.

- Save the trained weights in .pt format (e.g., the best.pt checkpoint produced by Ultralytics training) for inference.

- Transfer the model to the Raspberry Pi and create a backup directory.
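Keeping a backup copy makes a simple startup fallback possible. The sketch below assumes the Ultralytics `YOLO` class is used to load the `.pt` weights; the paths `models/best.pt` and `models/backup/best.pt` are hypothetical, and the `loader` parameter is an illustrative hook so the failover logic can be tested without real weights.

```python
import os

# Hypothetical locations; the project does not document its actual paths.
PRIMARY_MODEL = "models/best.pt"
BACKUP_MODEL = "models/backup/best.pt"


def load_model_with_fallback(paths, loader=None):
    """Try each weights file in order and return (model, path) for the first that loads."""
    if loader is None:
        from ultralytics import YOLO  # imported lazily: only needed on the Pi

        loader = YOLO
    errors = []
    for path in paths:
        if not os.path.isfile(path):
            errors.append(f"{path}: file not found")
            continue
        try:
            return loader(path), path
        except Exception as exc:  # e.g. corrupted or incompatible weights
            errors.append(f"{path}: {exc}")
    raise RuntimeError("No usable model found; " + "; ".join(errors))


# Usage on the Pi:
#   model, used_path = load_model_with_fallback([PRIMARY_MODEL, BACKUP_MODEL])
```

Logging `used_path` makes it visible in the error log when the backup model is in use.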

🔊 4. Buzzer (Alarm Output)

Function: Provides audio warnings when drowsiness is detected.

Preparation:

- Connect the buzzer to the Raspberry Pi’s GPIO pins.

- Implement a Python script to trigger the buzzer based on detection results.
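One way to structure the buzzer script is to keep the decision (is any detected class a drowsiness indicator?) separate from the hardware call. In this sketch the class names in `DROWSY_LABELS` are hypothetical placeholders for the custom model's labels, and the buzzer object is assumed to expose `on()`/`off()` like `gpiozero.Buzzer`; GPIO 17 in the usage comment is an arbitrary example pin.

```python
# Hypothetical class names; replace them with the labels of the custom YOLOv8 model.
DROWSY_LABELS = {"closed_eyes", "yawn", "head_tilt"}


def is_drowsy(detected_labels, drowsy_labels=DROWSY_LABELS):
    """Return True if any detected class name is a drowsiness indicator."""
    return any(label in drowsy_labels for label in detected_labels)


class BuzzerAlarm:
    """Keep the buzzer on while drowsiness is detected and off otherwise.

    `buzzer` is any object with on()/off() methods, such as gpiozero.Buzzer.
    """

    def __init__(self, buzzer):
        self.buzzer = buzzer
        self.active = False

    def update(self, detected_labels):
        """Process one frame's labels; only switches the buzzer when the state changes."""
        drowsy = is_drowsy(detected_labels)
        if drowsy and not self.active:
            self.buzzer.on()
        elif not drowsy and self.active:
            self.buzzer.off()
        self.active = drowsy
        return drowsy


# Usage on the Pi (assumes gpiozero and an Ultralytics model; GPIO 17 is an example pin):
#   from gpiozero import Buzzer
#   alarm = BuzzerAlarm(Buzzer(17))
#   result = model(frame)[0]
#   alarm.update([result.names[int(c)] for c in result.boxes.cls])
```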

🌐 5. WiFi Connectivity & Cloud Platform (Blynk Cloud)

Function: Sends detection data to the cloud and displays it on a real-time dashboard.

Preparation:

- Register and create a template on blynk.cloud.

- Set up widgets such as driver status, virtual LED, and terminal log.

- Store the authentication token and integrate it into the Python code on the Raspberry Pi.
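For the Python side, one option is Blynk's HTTPS API, which updates a datastream with a single GET request. This sketch assumes the `/external/api/update` endpoint on `blynk.cloud` and a virtual pin such as `V0` for driver status; verify the exact endpoint and your region's server address against the current Blynk documentation, and load the token from the environment rather than hard-coding it.

```python
import urllib.parse
import urllib.request

# Assumed server and endpoint; check the Blynk HTTPS API docs for your region.
BLYNK_SERVER = "https://blynk.cloud"


def build_update_url(token, pin, value, server=BLYNK_SERVER):
    """Build the URL that sets one virtual pin (e.g. "V0") to a value."""
    query = urllib.parse.urlencode({"token": token, pin: value})
    return f"{server}/external/api/update?{query}"


def send_status(token, pin, value, timeout=5.0):
    """Send one value to Blynk; return True on HTTP 200, False on network errors."""
    url = build_update_url(token, pin, value)
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:  # URLError, HTTPError and timeouts are all OSError subclasses
        return False


# Usage (BLYNK_AUTH_TOKEN is a hypothetical environment variable name):
#   import os
#   send_status(os.environ["BLYNK_AUTH_TOKEN"], "V0", "Drowsy")
```

Returning `False` instead of raising lets the caller hand the event to a local buffer when the connection is down.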

💡 6. LED (Optional)

Function: Provides visual indicators of system status (e.g., normal, drowsy, offline).

Preparation:

- Connect the LED to GPIO pins.

- Program the LED to light up based on the system’s detection output.
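The status-to-LED mapping can be kept in one table so the behaviour is easy to change. The patterns chosen here (off for normal, solid on for drowsy, blinking for offline) are illustrative assumptions; the LED object is expected to provide `on()`, `off()` and `blink()` as `gpiozero.LED` does.

```python
# Hypothetical mapping from system status to LED pattern.
LED_PATTERNS = {
    "normal": "off",
    "drowsy": "on",
    "offline": "blink",
}


def apply_status(led, status):
    """Drive an LED-like object (e.g. gpiozero.LED) according to the system status."""
    pattern = LED_PATTERNS.get(status)
    if pattern is None:
        raise ValueError(f"unknown status: {status!r}")
    if pattern == "on":
        led.on()
    elif pattern == "blink":
        led.blink(on_time=0.5, off_time=0.5)  # gpiozero blinks in a background thread
    else:
        led.off()
    return pattern
```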

💽 7. Cloud Storage / Logger (Optional)

Function: Stores detection logs and error reports for historical analysis.

Preparation:

- Set up local logging on the Raspberry Pi.

- Enable synchronization with cloud services such as Firebase, Google Drive API, or Blynk Terminal.
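A simple way to combine local logging with later synchronization is an append-only JSON Lines file that is flushed when the connection returns. This is a sketch, not the project's implementation: the buffer path is hypothetical, and `send` stands for whatever upload function is used (for example a wrapper around a Blynk request) that returns `True` on success.

```python
import json
import os


class OfflineBuffer:
    """Append-only JSON Lines buffer for detection events awaiting upload."""

    def __init__(self, path="buffer/pending.jsonl"):  # hypothetical location
        self.path = path
        directory = os.path.dirname(path)
        if directory:
            os.makedirs(directory, exist_ok=True)

    def add(self, event):
        """Append one event (a JSON-serializable dict) to the buffer file."""
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(event) + "\n")

    def pending(self):
        """Return all buffered events in the order they were added."""
        if not os.path.exists(self.path):
            return []
        with open(self.path, encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]

    def flush(self, send):
        """Upload buffered events in order and return how many were sent.

        After the first failed upload, the remaining events are kept without
        retrying so that their chronological order is preserved.
        """
        remaining = []
        sent = 0
        for event in self.pending():
            if not remaining and send(event):
                sent += 1
            else:
                remaining.append(event)
        tmp_path = self.path + ".tmp"
        with open(tmp_path, "w", encoding="utf-8") as f:
            for event in remaining:
                f.write(json.dumps(event) + "\n")
        os.replace(tmp_path, self.path)  # a crash mid-write leaves the previous buffer intact
        return sent
```

Writing to a temporary file and swapping it in with `os.replace` means a power cut during a flush does not corrupt events that were already buffered.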

🔐 8. Data Security & Privacy

Function: Protects facial image data and detection logs from unauthorized access.

Preparation:

- Implement end-to-end encryption for data transmissions.

- Enable two-factor authentication (2FA) on the cloud dashboard to enhance access control.

📄Dataset:

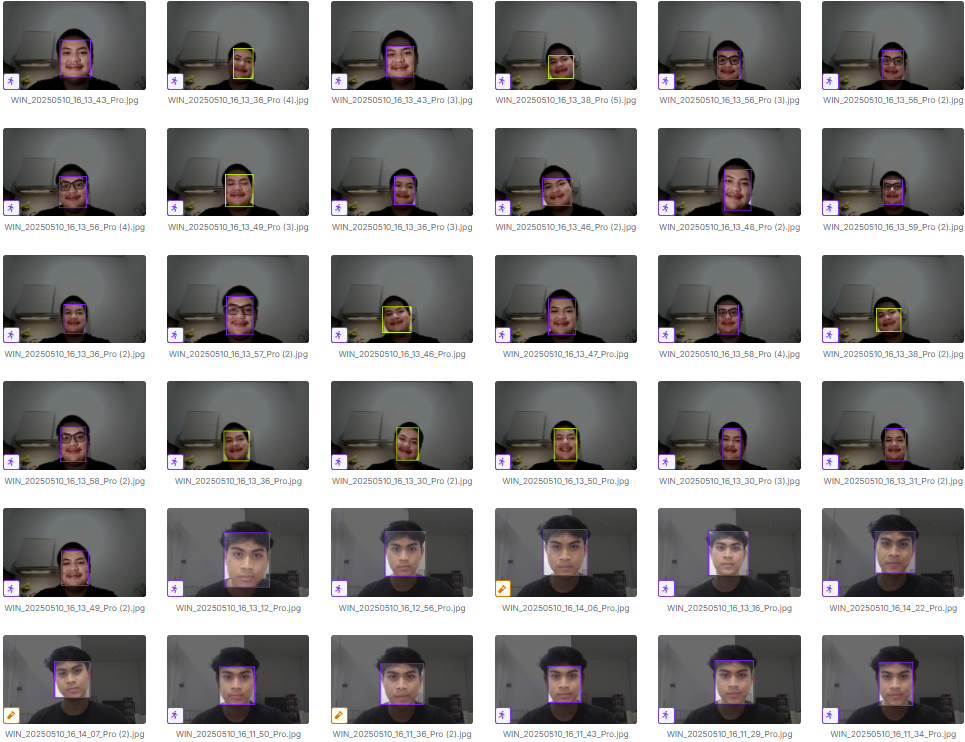

🖼️Primary Dataset

All datasets used in this project were annotated with Roboflow, a visual data annotation platform that supports standard object detection training formats, including YOLOv8. Annotation was done manually by marking key areas such as the eyes, face, and expressions that indicate signs of drowsiness. Using Roboflow, the data could be prepared in a structured form that is directly compatible with the training process.

To support the training of the drowsiness detection model, the team used a primary dataset sourced from facial images of each team member. Each member was asked to capture images of their face in various states, particularly when fully alert and when simulating drowsiness through actions like closing their eyes, lowering their head, or yawning. In total, hundreds of images were collected, featuring a variety of viewing angles and lighting conditions.

All collected images were then manually annotated using the Roboflow platform. This process involved labeling important areas like the eyes, face, and specific expressions that serve as indicators of drowsiness. This dataset formed the fundamental basis for training the model, as it was sourced directly from the system’s actual users and represents the conditions the model will face upon implementation.
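When Roboflow exports a dataset in YOLOv8 format, each image gets a `.txt` label file with one line per box: the class index followed by the box centre, width, and height, all normalized by the image size. The helpers below illustrate that conversion for a pixel-space box; the class index and coordinates in the example are made up.

```python
def to_yolo_line(class_id, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line.

    YOLO-format labels store: class x_center y_center width height,
    with every coordinate normalized to [0, 1] by the image size.
    """
    x_min, y_min, x_max, y_max = box
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def from_yolo_line(line, img_w, img_h):
    """Inverse of to_yolo_line: return (class_id, (x_min, y_min, x_max, y_max)) in pixels."""
    class_id, x_c, y_c, w, h = line.split()
    x_c, w = float(x_c) * img_w, float(w) * img_w
    y_c, h = float(y_c) * img_h, float(h) * img_h
    return int(class_id), (x_c - w / 2, y_c - h / 2, x_c + w / 2, y_c + h / 2)


# Example: a face box in a 640x480 frame, labelled with (hypothetical) class 0.
#   to_yolo_line(0, (200, 120, 440, 360), 640, 480)
#   -> "0 0.500000 0.500000 0.375000 0.500000"
```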

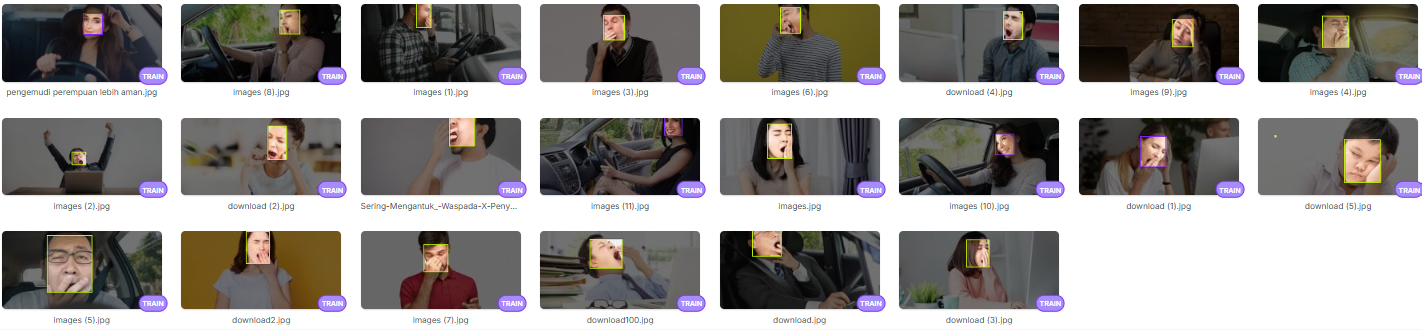

🖼️Secondary Dataset

In addition to the data collected by the team, a secondary dataset was used to augment the training data and enrich its visual diversity. This dataset consists of facial images sourced from open platforms, such as Roboflow Universe and various other online sources. The faces included are from a diverse and random pool of individuals, not limited to just the project team members.

This secondary data encompasses a wide range of facial expressions and conditions, such as individuals wearing glasses, those with beards, or people partially wearing masks, along with a broader spectrum of lighting conditions and viewing angles. The objective of incorporating this secondary dataset is to enhance the model’s ability to recognize faces with more varied characteristics, thereby enabling it to perform more effectively in complex, real-world scenarios.

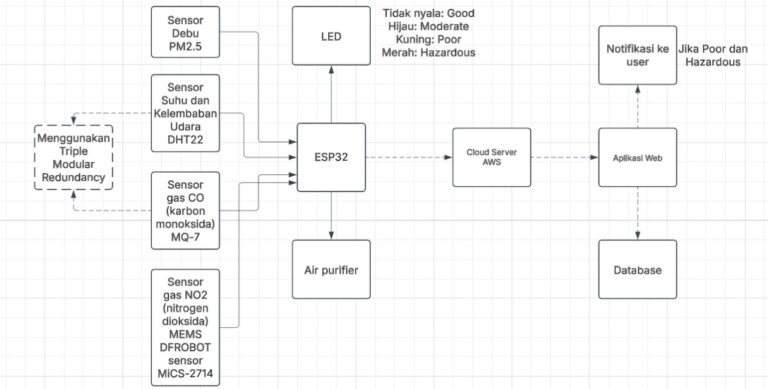

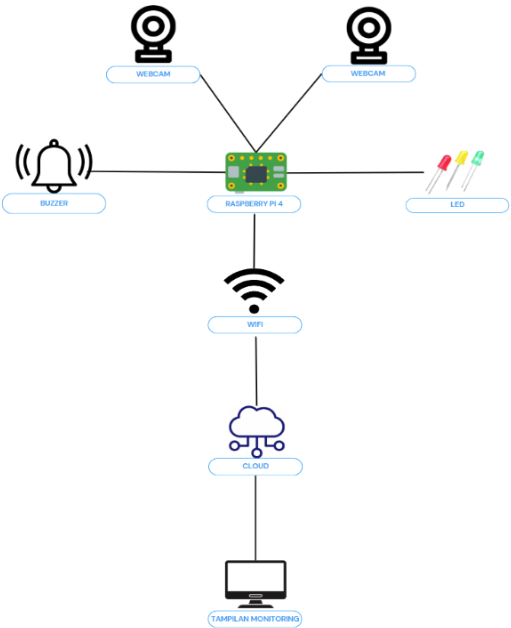

🧩 Schematic

📈Workflow:

📷Camera & Redundant Camera

The primary camera is used to capture real-time facial images of the driver. The system is also equipped with a redundant camera that functions as a failover in case the primary camera experiences a failure. The Raspberry Pi automatically switches to the backup camera upon detecting an error in the visual input stream.
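A minimal sketch of runtime camera failover, assuming OpenCV's `cv2.VideoCapture` and device indices 0 (primary) and 1 (backup): after a configurable number of consecutive failed reads, the current device is released and the next one is opened. The `open_camera` parameter is an illustrative hook for testing, not part of any library.

```python
def _open_with_opencv(index, width=640, height=480):
    """Open a camera with OpenCV at 640x480."""
    import cv2  # imported lazily so the failover logic runs without OpenCV installed

    cap = cv2.VideoCapture(index)
    cap.set(cv2.CAP_PROP_FRAME_WIDTH, width)
    cap.set(cv2.CAP_PROP_FRAME_HEIGHT, height)
    return cap


class FailoverCamera:
    """Read frames from the first working camera, moving to the next on repeated failures."""

    def __init__(self, indices=(0, 1), open_camera=_open_with_opencv, max_failures=5):
        self.indices = list(indices)
        self.open_camera = open_camera  # callable: index -> VideoCapture-like object
        self.max_failures = max_failures
        self.position = -1
        self.cap = None
        self.failures = 0
        self._switch()

    def _switch(self):
        """Release the current device and open the next one that reports isOpened()."""
        if self.cap is not None:
            self.cap.release()
            self.cap = None
        self.position += 1
        while self.position < len(self.indices):
            cap = self.open_camera(self.indices[self.position])
            if cap.isOpened():
                self.cap = cap
                self.failures = 0
                return
            cap.release()
            self.position += 1
        raise RuntimeError("no working camera available")

    @property
    def active_index(self):
        return self.indices[self.position]

    def read(self):
        """Return the next frame; switch cameras after max_failures consecutive bad reads."""
        while True:
            ok, frame = self.cap.read()
            if ok:
                self.failures = 0
                return frame
            self.failures += 1
            if self.failures >= self.max_failures:
                self._switch()  # raises RuntimeError once every camera has failed
```

Requiring several consecutive failures avoids switching on a single dropped frame; switching back to the primary camera after it recovers is not handled in this sketch.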

🖥️Raspberry Pi

The Raspberry Pi serves as the central control unit of the system. It receives visual input from the camera and processes the images with the embedded machine learning model. The Raspberry Pi also controls output components such as the LED and buzzer, records detection results in a log, and transmits data to the cloud over WiFi. Inference runs locally on the device, so the system stays fast and stable even when disconnected from the internet.

🧠Machine Learning Model

The YOLOv8 Machine Learning model is utilized to detect signs of drowsiness based on the driver’s facial images. This model runs locally on the Raspberry Pi to ensure a fast and responsive inference process, even when operating without an internet connection.

💾Logger

The system records detection results, fault-tolerant events (such as camera switching), and inference status into a local log file. This information can also be transmitted to the cloud for remote monitoring.

🚨LED & Buzzer

The system provides notifications to the driver via an LED and a buzzer. The LED illuminates as a visual indicator when the driver is detected in a drowsy state, while the buzzer emits an audible warning to immediately alert the driver. Both components are controlled directly by the Raspberry Pi based on the classification results from the YOLOv8 model.

🛜WiFi

The WiFi module on the Raspberry Pi transmits data to the cloud platform in real time and enables two-way communication between the local system and the monitoring dashboard.

📊Blynk Cloud

The Blynk Cloud platform functions as a central hub for data storage and visualization. All information regarding detection results and system status is sent here, allowing it to be monitored by management or vehicle supervisors.

🧷Diagram:

⚙️Demo and Evaluation:

🔧 Demo – System Workflow (Step-by-Step)

🔌 System Initialization

- Raspberry Pi 4 is powered on and automatically executes the main Python script.

- The USB camera is detected and starts capturing the driver’s facial images continuously.

🧠 Real-Time Drowsiness Detection

- Captured images are processed by the YOLOv8 model running locally on the Raspberry Pi.

- YOLOv8 detects key drowsiness features such as:

- Eyes closed for a specific duration

- Head tilting or nodding

- Yawning facial expressions

- If no drowsiness is detected → system status remains “Normal”.

- If drowsiness is detected → the buzzer is triggered and data is sent to the cloud.
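The document does not specify how the "specific duration" of eye closure is measured. One common approach is to track how long the closed-eye class has been detected continuously. In this sketch the 2-second threshold mirrors the test condition used in the evaluation, the per-frame `eyes_closed` flag is assumed to come from the YOLOv8 detections, and timestamps are supplied by the caller (for example from `time.monotonic()`).

```python
class ClosedEyeTimer:
    """Flag drowsiness only when the eyes stay closed for at least threshold_s seconds."""

    def __init__(self, threshold_s=2.0):
        self.threshold_s = threshold_s
        self.closed_since = None  # timestamp of the first frame in the current closure

    def update(self, eyes_closed, now):
        """Feed one frame's result; return True once the closure has lasted long enough."""
        if not eyes_closed:
            self.closed_since = None  # any open-eye frame resets the timer
            return False
        if self.closed_since is None:
            self.closed_since = now
        return now - self.closed_since >= self.threshold_s
```

Resetting on any open-eye frame keeps normal blinks from accumulating into a false alarm.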

🚨 Warning Alert Triggered

- A loud buzzer activates to alert or wake the driver.

- An optional LED indicator turns red.

- The system sends the “Drowsy” status to Blynk Cloud and displays it in real-time.

☁️ Cloud Synchronization

- Detection results are transmitted via WiFi to the Blynk Cloud platform.

- Data such as:

- Detection timestamp

- Driver status

- Event logs

are displayed directly on the Blynk web or mobile dashboard.

🔁 Fault Tolerance Scenarios

- If the primary camera fails → the system automatically switches to a backup camera.

- If the internet connection is lost → data is temporarily stored in local buffer storage.

- If the main YOLOv8 model is corrupted → the system loads a backup model from a secondary directory.

🧩 Video Link:

https://drive.google.com/file/d/1Hd3p5Jb8xAX4xQtBbkJM4rxf8jYIBlB4/view?usp=sharing

📊 Evaluation – Test Results and Analysis

✅ 1. Functional System Testing

- The system was tested with drivers in two simulated conditions:

- Alert: Eyes open, head upright.

- Drowsy: Eyes closed for >2 seconds, head tilted or nodding.

- Result: The system accurately distinguishes between both conditions and activates the buzzer only when drowsiness is detected.

⏱️ 2. Response and Latency Testing

- Average time from image capture → detection → buzzer activation: < 2 seconds

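- Conclusion: The system responds in real time. Because the alert path runs locally, its latency depends mainly on inference speed on the Raspberry Pi, while WiFi stability affects how quickly results reach the cloud dashboard.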
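A measurement like this can be reproduced by timing the local alert path with a high-resolution monotonic clock. The sketch uses `time.perf_counter()` around three callables, `capture`, `detect`, and `alert`, which are hypothetical stand-ins for the camera read, YOLOv8 inference, and buzzer call.

```python
import time
from statistics import mean


def timed_pipeline(capture, detect, alert):
    """Run capture -> detect -> alert once and return the elapsed time in seconds."""
    start = time.perf_counter()
    frame = capture()
    result = detect(frame)
    alert(result)
    return time.perf_counter() - start


def average_latency(capture, detect, alert, runs=20):
    """Average end-to-end latency over several runs, in seconds."""
    return mean(timed_pipeline(capture, detect, alert) for _ in range(runs))
```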

🎯 3. Detection Accuracy Testing

- The trained YOLOv8 model was evaluated using a dedicated test dataset:

- Precision: 91.4%

- Recall: 88.2%

- F1-Score: 89.7%

- mAP@0.5: 92.6%

- Conclusion: The model is reliable and accurate in detecting facial expressions related to driver drowsiness.
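As a consistency check, the F1-score is the harmonic mean of precision $P$ and recall $R$. Plugging in the reported values:

```latex
F_1 = \frac{2PR}{P + R}
    = \frac{2 \times 0.914 \times 0.882}{0.914 + 0.882}
    = \frac{1.612296}{1.796}
    \approx 0.8977
```

The result, about 89.8%, agrees with the reported 89.7% to within the rounding of the precision and recall figures.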

🔁 4. Fault Tolerant System Testing

- Internet disconnected: System continues saving data locally, and syncs automatically when reconnected.

- Primary camera removed: System immediately switches to backup camera.

- Main model deleted: System loads and runs the backup model.

- Conclusion: The fault-tolerant mechanisms perform well in maintaining system reliability.

🌐 5. Cloud Integration Testing

- Detection data successfully appears on Blynk Dashboard:

- Driver status: “Normal” or “Drowsy”

- Timestamp of each detection

- Virtual LED and terminal logs

- Conclusion: Management can monitor driver conditions remotely and seamlessly via the cloud.

📌 Evaluation Summary

The drowsiness detection system works accurately, in real time, and remains robust under failure conditions. With real-time alerts (buzzer), seamless cloud integration, and effective fault tolerance, this system is well-suited for real-world deployment in public transportation, particularly for PO Setianegara bus operations.

✅Conclusion:

This project presents a comprehensive and intelligent solution for detecting driver drowsiness in real time, addressing a critical safety issue in the transportation industry. By integrating advanced computer vision with YOLOv8, edge-based processing on Raspberry Pi 4, and seamless cloud connectivity, the system ensures high detection accuracy while maintaining performance under various environmental conditions.

The implementation of Embedded AI allows real-time inference directly on the device, so the system keeps functioning without an internet connection. If connectivity is lost, detection data is buffered locally until it can be synchronized; if the primary camera or model file fails, the built-in Fault Tolerant System automatically switches to the backup camera or model, ensuring continuous operation and reliability. Meanwhile, the buzzer and LED alerts give drivers immediate, local warnings, helping to reduce the risk of accidents caused by fatigue.

In addition to real-time feedback, the integration with Blynk Cloud and a web dashboard provides centralized data logging and visibility for management. This supports proactive safety monitoring across vehicle fleets and enables long-term analysis of driver behavior patterns. Overall, this solution not only supports safer driving through direct, real-time intervention, but also empowers transportation companies to monitor, evaluate, and improve driver performance. The project demonstrates how embedded AI, IoT, and cloud systems can be harmonized to solve real-world problems in a scalable and practical way.

Future developments could focus on enhancing detection in low-light environments using infrared or thermal cameras, personalizing detection sensitivity through individual driver profiles, and implementing adaptive fatigue management strategies to reduce alert fatigue. The system could also be integrated into a larger fleet management platform to provide predictive analytics and automated incident reporting.